Finding Which Campaigns Work

How Display, Paid Search, and Cross-Sell Strategies Convert New Customers

Overview

This analysis reviews how our past marketing campaigns performed to understand what actually works. We focus on expected uplift, our main signal of whether a campaign is effective, and compare performance across channels, customer segments, objectives, and timing. The goal is simple: help Marketing Managers see which campaign choices lead to stronger results and which do not.

The purpose of this report is to give Marketing Managers clear guidance for future planning. By identifying the channels to invest in, the segments most worth targeting, the objectives that perform best, and the months with higher response, this analysis supports smarter budgeting, better campaign design, and more confident decision-making.

Data Sources and Methodology

We analyzed a dataset of 50 marketing campaigns covering different channels, customer segments, campaign objectives, and timing factors. The data included both new-customer campaigns and those aimed at existing users, and we used expected uplift as the main measure of effectiveness. To prepare the dataset for analysis, we cleaned and standardized all fields, created additional features such as campaign duration and launch month, and converted categorical variables like channel, segment, and objective into formats suitable for comparison.
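
As a rough illustration of the preparation step, the Python sketch below derives campaign duration and launch month from start and end dates and normalizes the categorical labels. The field names (start_date, end_date, channel, segment, objective), the inclusive day count, and the example dates are hypothetical; the report does not describe the dataset's actual schema or cleaning rules.

```python
from datetime import date


def add_timing_features(campaign):
    """Return a copy of a campaign record with duration and launch month added.

    Hypothetical schema: 'start_date' and 'end_date' hold datetime.date values,
    and 'channel', 'segment', 'objective' hold free-text labels.
    """
    enriched = dict(campaign)
    start, end = campaign["start_date"], campaign["end_date"]
    # Inclusive count (an assumption): a one-day campaign has duration 1.
    enriched["duration_days"] = (end - start).days + 1
    # Month number (1-12) of the launch date, used for seasonal comparisons.
    enriched["launch_month"] = start.month
    # Collapse stray whitespace and case so " Paid  Search" and "paid search" match.
    for field in ("channel", "segment", "objective"):
        enriched[field] = " ".join(campaign[field].split()).lower()
    return enriched


# Illustrative record only; not taken from the campaign dataset.
example = add_timing_features({
    "channel": " Paid  Search", "segment": "High Value", "objective": "Cross-sell",
    "start_date": date(2024, 2, 1), "end_date": date(2024, 2, 28),
})
print(example["duration_days"], example["launch_month"], example["channel"])  # 28 2 paid search
```

Normalizing labels before grouping prevents the same channel from being split into several categories by inconsistent spelling or spacing.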

Using this prepared dataset, we ran descriptive analyses to understand how uplift varies across channels, segments, objectives, and seasonal patterns. We also examined the impact of campaign duration and identified high-performing combinations of channel, segment, and objective. The goal of this methodology was to pinpoint the factors that most influence campaign performance and generate insights that Marketing Managers can directly apply to improve planning and decision-making.
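
The descriptive comparisons in this report amount to grouping campaigns and averaging their uplift. A minimal sketch, assuming each campaign is a dictionary with a hypothetical uplift field, that also returns the campaign count per group so that averages built on very few campaigns are easy to spot:

```python
from collections import defaultdict
from statistics import mean


def mean_uplift_by(campaigns, *keys):
    """Mean uplift and campaign count for each combination of the given keys.

    Assumes each campaign is a dict with an 'uplift' field (hypothetical name)
    plus the grouping fields, e.g. 'channel', 'segment', 'objective'.
    """
    groups = defaultdict(list)
    for campaign in campaigns:
        groups[tuple(campaign[k] for k in keys)].append(campaign["uplift"])
    summary = {group: (mean(values), len(values)) for group, values in groups.items()}
    # Order groups from highest to lowest mean uplift.
    return dict(sorted(summary.items(), key=lambda item: item[1][0], reverse=True))


# Toy records for illustration only.
toy = [
    {"channel": "Display", "uplift": 0.10},
    {"channel": "Display", "uplift": 0.08},
    {"channel": "Email", "uplift": 0.07},
]
print(mean_uplift_by(toy, "channel"))
```

Passing several keys, for example `mean_uplift_by(campaigns, "channel", "objective", "segment")`, gives the combination-level view used later in the report.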

1. Are performance differences between channels statistically meaningful?

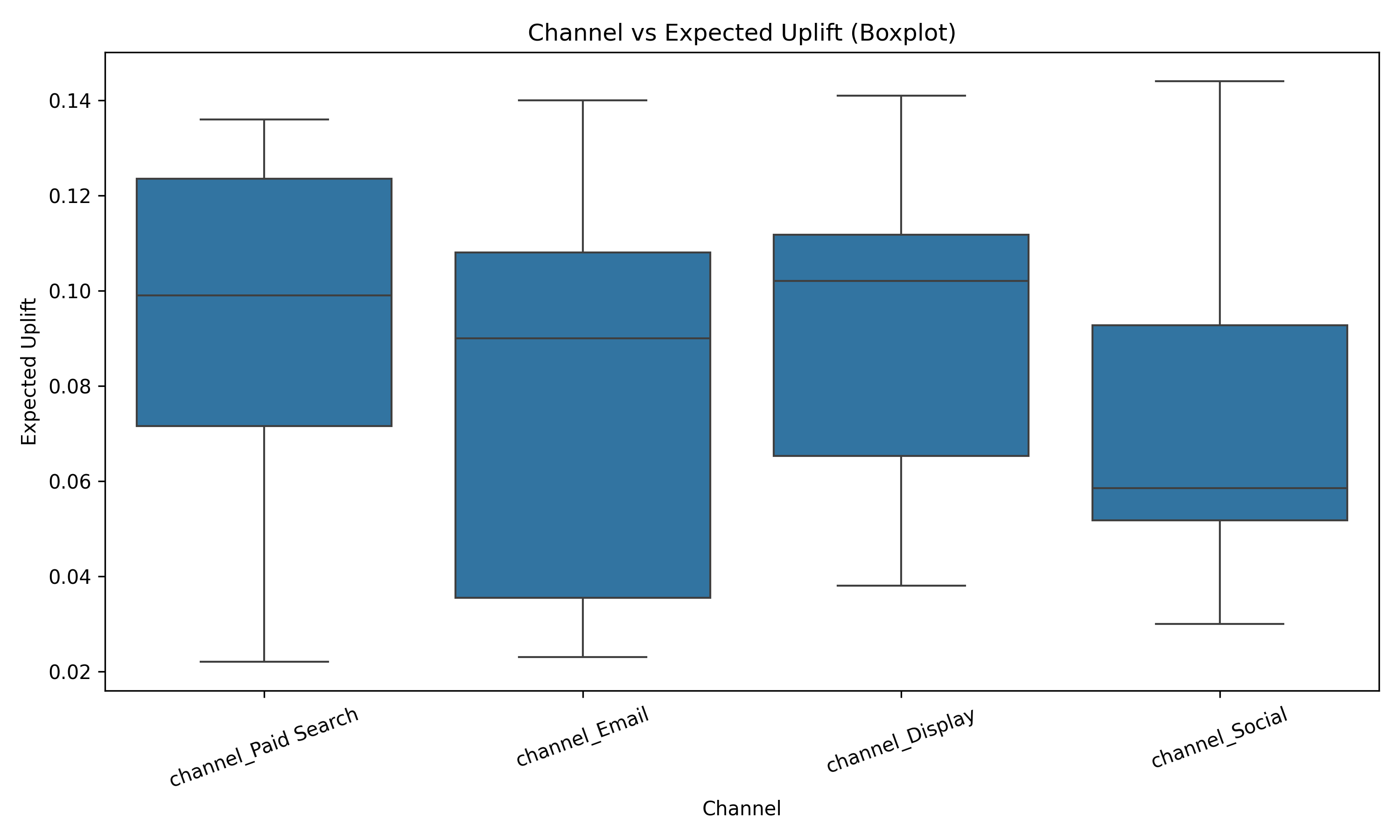

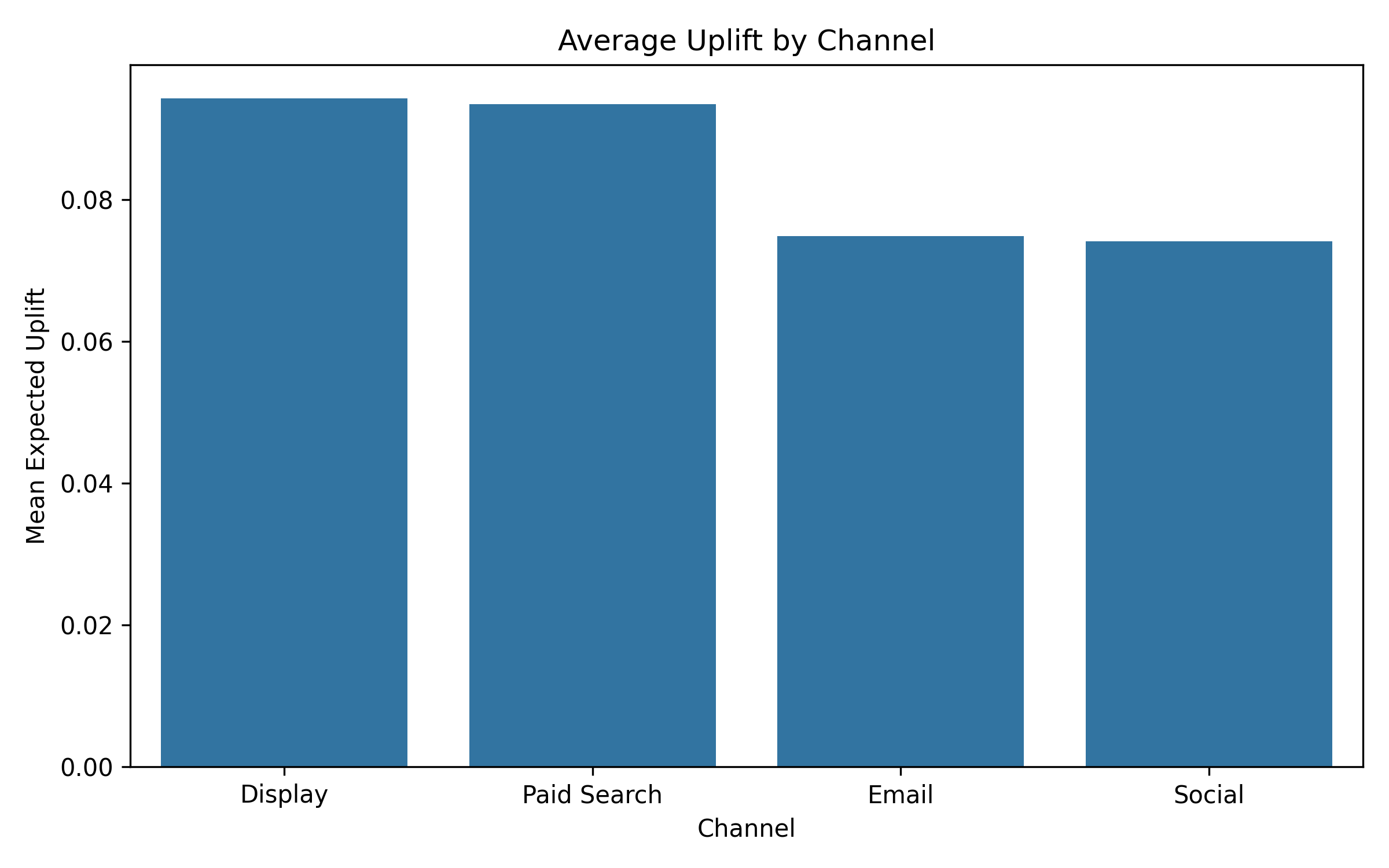

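Yes, in practical terms the differences in uplift across channels look meaningful. Display (0.09435), Paid Search (0.09355), and Other channels (0.09864) all average higher uplift than Social (0.07413) and Email (0.07491). A gap of roughly 0.02 is about a quarter of Email's average uplift, which is large enough to suggest that channel choice genuinely affects campaign results.

No formal statistical test was run, so this remains a descriptive finding. With only 50 campaigns, roughly 10 to 14 per major channel, a test on campaign-level uplift (such as a permutation test or a one-way ANOVA) is needed to confirm that the gaps are unlikely to be due to chance. Even so, the top channels sit consistently above the lower-performing ones, which gives a reasonable basis for rethinking where budget and effort should go.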
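
One way to check whether a channel gap is larger than chance would produce is a permutation test on campaign-level uplift. The Python sketch below is a minimal standard-library version. The per-campaign uplift lists it expects would come from the campaign dataset, which this report does not reproduce, so no result is shown here; the function name and defaults are illustrative.

```python
import random
import statistics


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in mean uplift.

    group_a, group_b: per-campaign uplift values for two channels.
    Returns (observed_difference, p_value).
    """
    rng = random.Random(seed)  # fixed seed so reruns give the same p-value
    observed = statistics.mean(group_a) - statistics.mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Randomly reassign campaigns to the two channels and recompute the gap.
        rng.shuffle(pooled)
        diff = statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value
```

A permutation test is used here because it makes no normality assumption, which matters when each channel has only 10 to 14 campaigns. A call would look like `permutation_test(display_uplift, email_uplift)`, where both hypothetical lists hold one uplift value per campaign.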

Because these differences appear throughout the dataset, channel selection should be treated as a major driver of performance and one of the first decisions considered when planning future campaigns.

2. Which channels deliver the highest uplift, and what does this mean for budget allocation?

Among the named channels, Display delivers the highest uplift at 0.09435, followed closely by Paid Search at 0.09355; both average roughly 25% more uplift than Email. Social sits in the middle at 0.08657, while Email is the weakest at 0.07491. This creates a clear ranking: Display and Paid Search lead, Social is in the middle, and Email trails. (The Other group, at 0.09864, combines several smaller channels and is not ranked here.)

For Marketing Managers, this ranking provides a simple direction for budget decisions. Shifting more investment toward Display and Paid Search is likely to increase overall campaign impact, because these channels have delivered higher average uplift in past campaigns.

Email can still be useful, but it should not carry the main load given its lower performance. Treating it as a supporting channel rather than a primary one will help raise average campaign effectiveness.

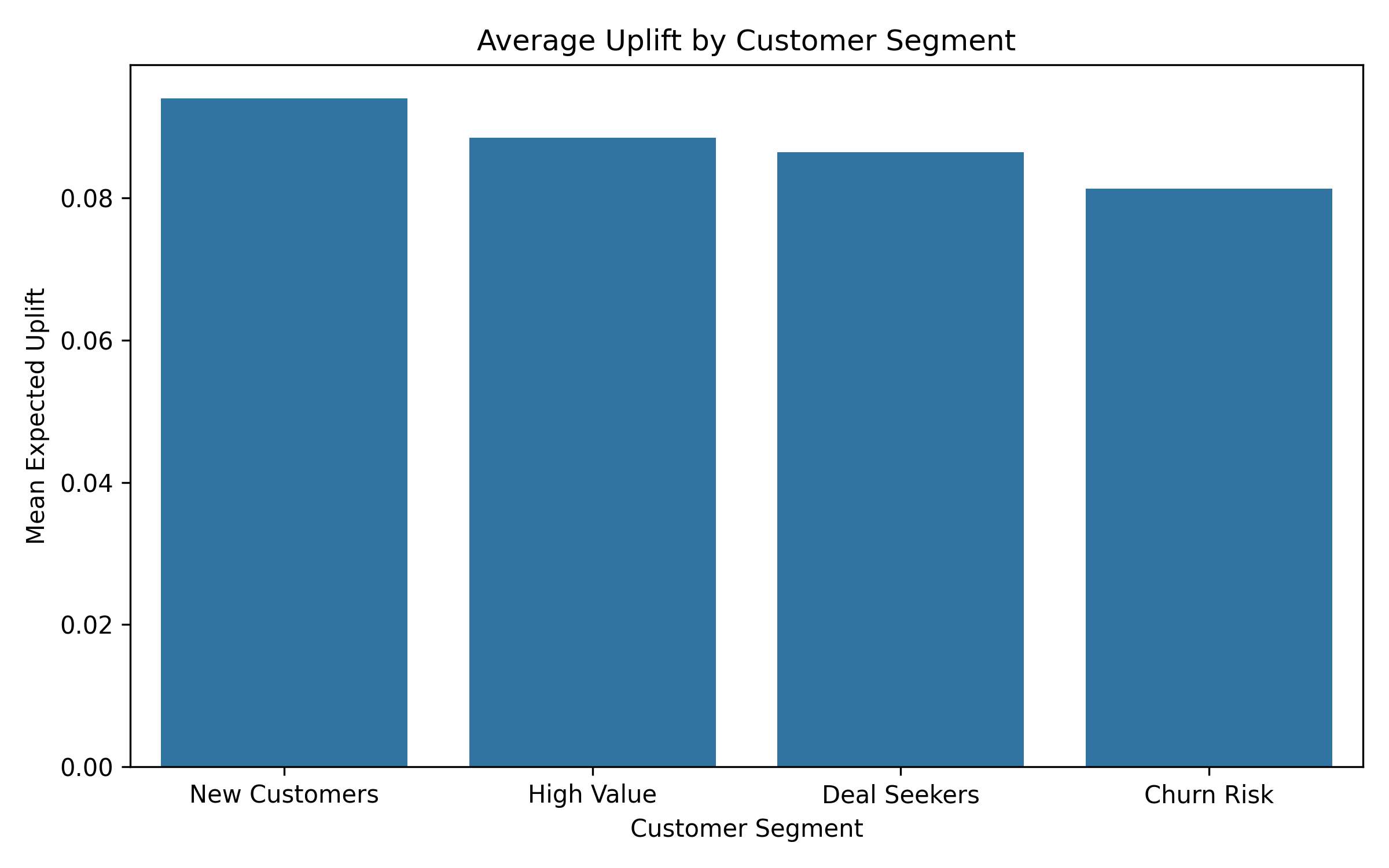

3. Which customer segments are the most responsive?

High Value customers respond the best, with an average uplift of 0.0913, followed closely by New Customers at 0.0904. These two groups deliver the strongest results and the highest uplift per campaign.

In comparison, Churn Risk customers average 0.0751 uplift, and Deal Seekers average 0.0714, making them more challenging to influence. These segments may require different messaging or more precise targeting to deliver stronger outcomes.

Given the clear difference in responsiveness, focusing more campaigns on High Value and New Customer groups is likely to raise average uplift without increasing budget.

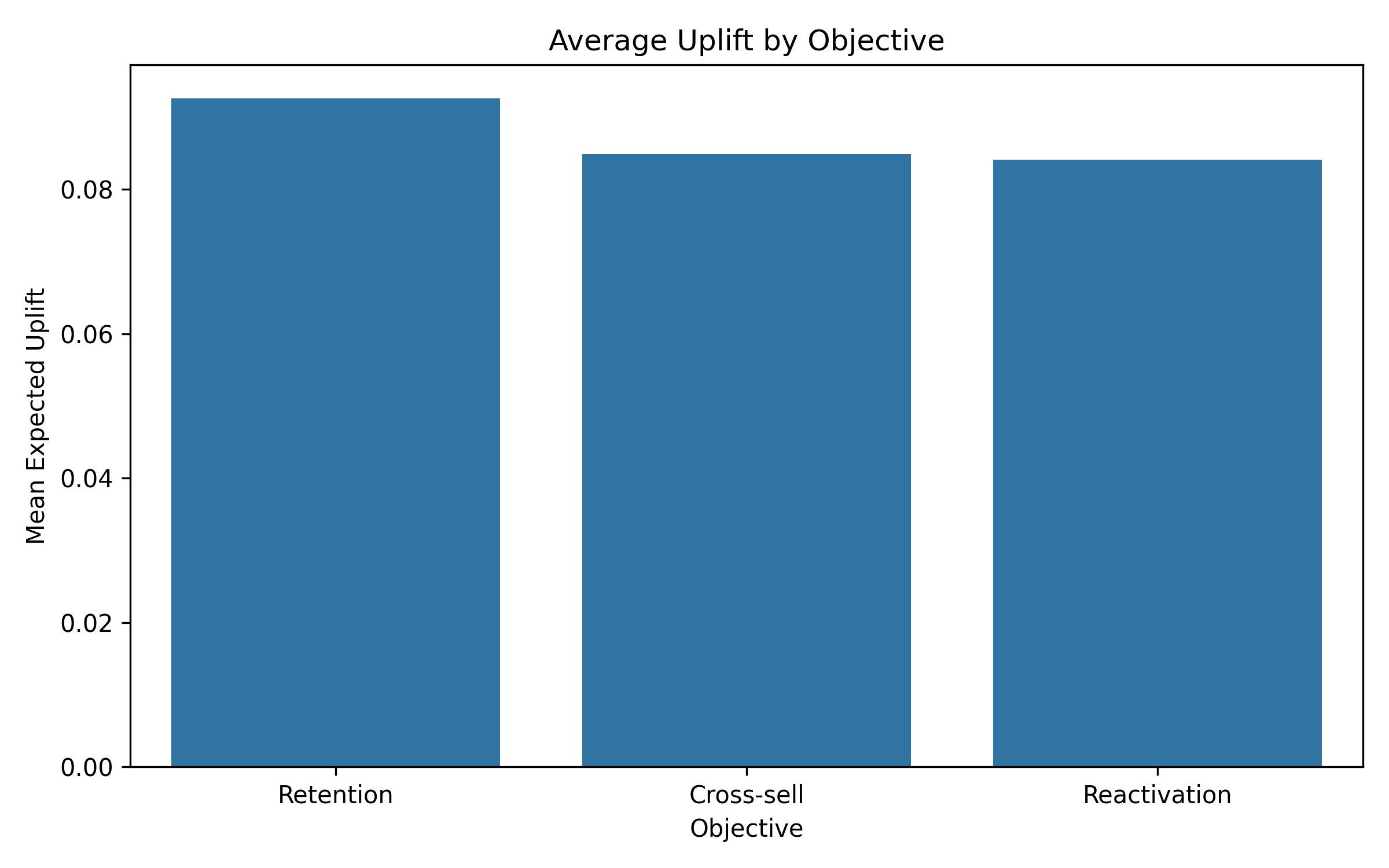

4. Which campaign objectives deliver the strongest results?

Cross-sell and Retention are the strongest objectives, with uplift values of 0.09267 and 0.09233. Both build on existing relationships, encouraging additional purchases or deepening engagement with active customers.

Reactivation performs lower at 0.08367, suggesting that bringing back inactive users requires more effort and delivers less uplift. This objective may still be important but should be used more selectively.

Directing more campaigns toward Cross-sell and Retention will likely generate stronger outcomes, especially when paired with highly responsive segments like High Value and New Customers.

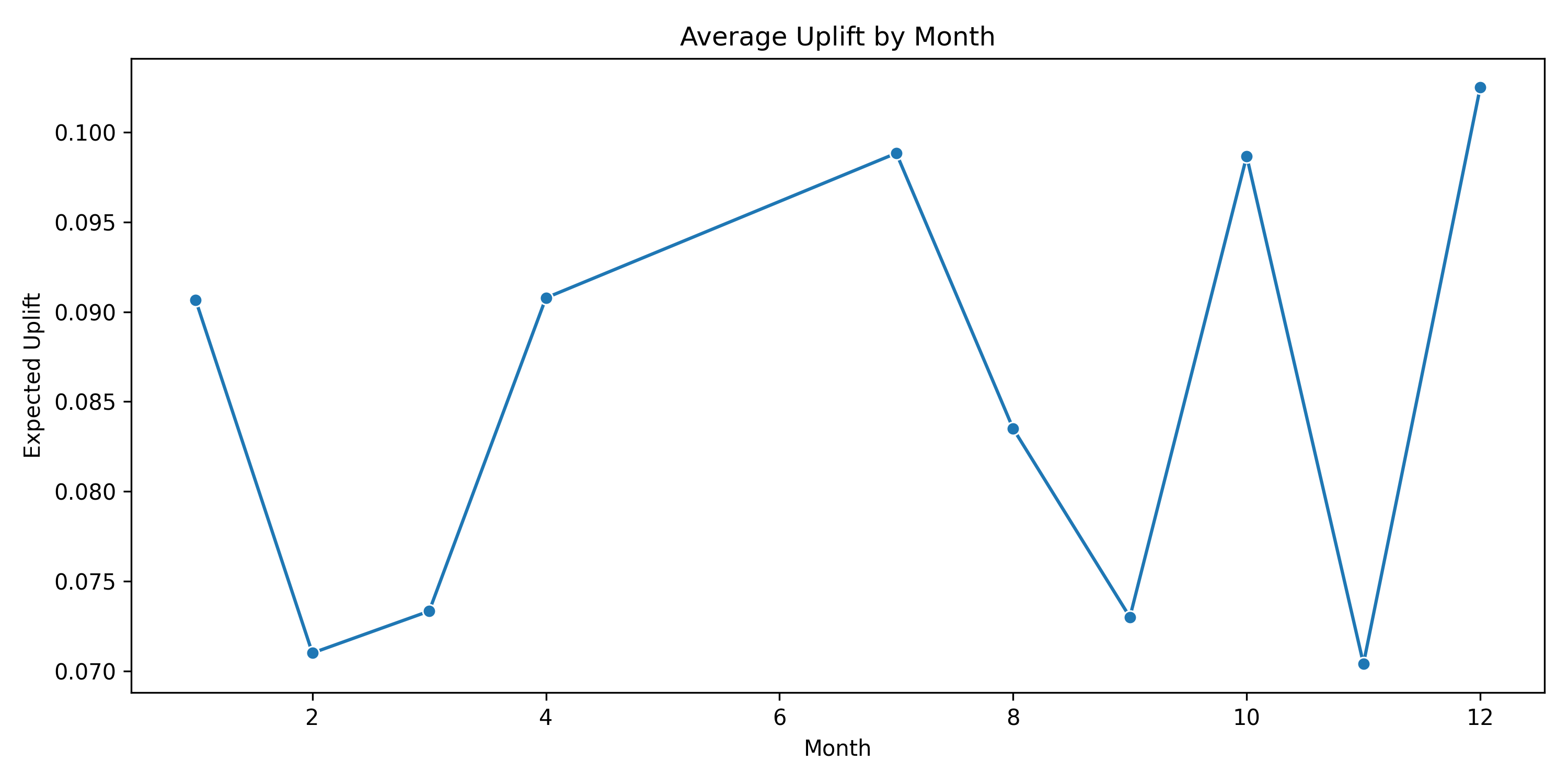

5. Do seasonal or timing patterns affect campaign performance?

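Yes, campaign performance appears to vary across the year. February (0.10600) and November (0.11350) are the strongest months, while September (0.06200) is the weakest. Because the 50 campaigns are spread across the calendar, each monthly average rests on only a few campaigns, so these peaks and dips may partly reflect individual campaigns rather than a stable seasonal pattern.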

These patterns matter for planning. High-impact campaigns should be scheduled in February and November, when past campaigns have shown the strongest response. This can lift outcomes without increasing spend.

September is better suited for low-risk tests or brand-building activities. Using seasonal insights helps avoid spending heavily during low-response periods and maximizes results during peak months.

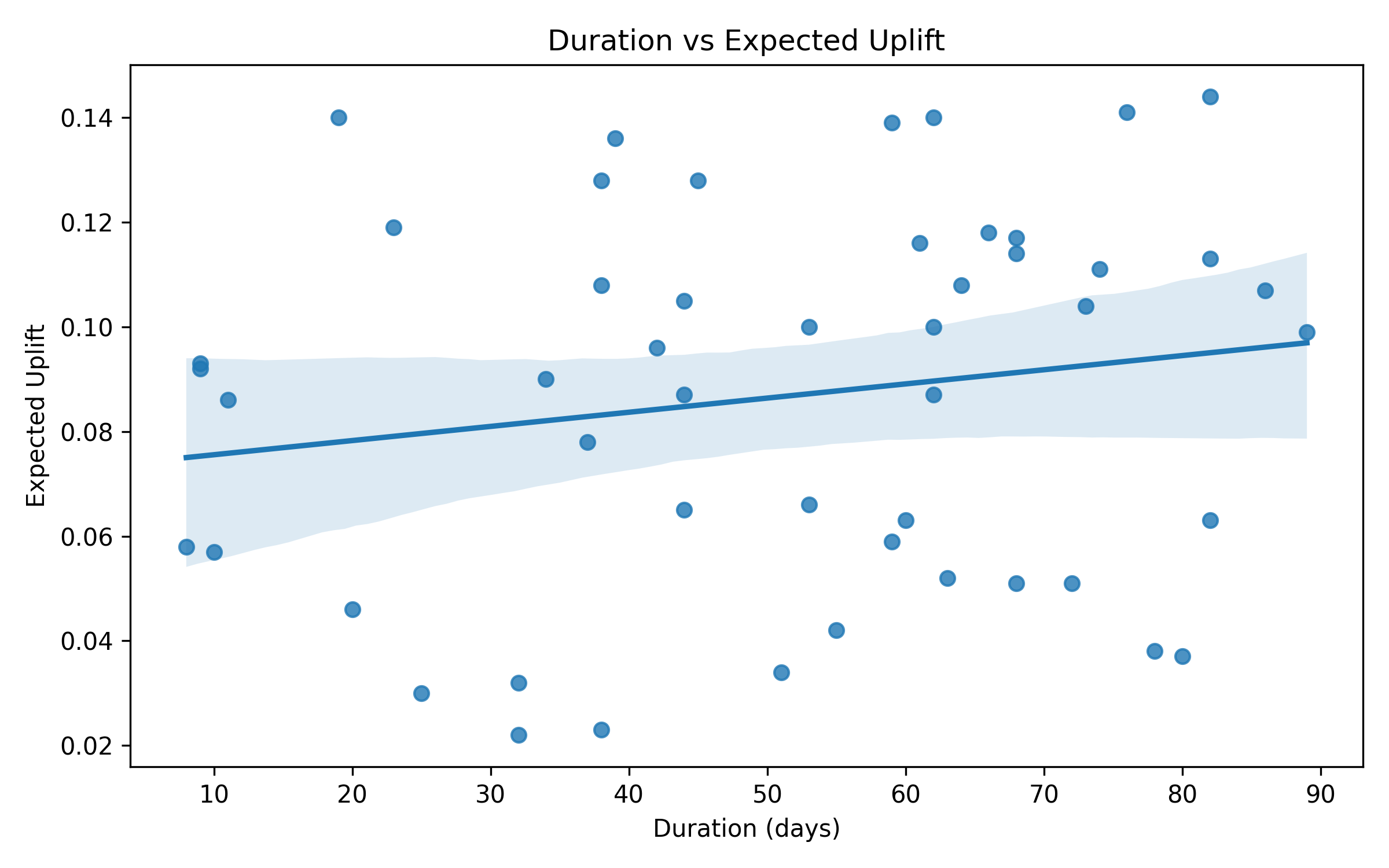

6. Does campaign duration influence uplift?

No, campaign duration shows no clear relationship with uplift in this dataset. High- and low-performing campaigns appear across all duration ranges, from very short to very long.

This means duration should not be treated as a performance lever. Marketing Managers can choose campaign length based on operational needs, creative cycles, or channel norms rather than expecting duration to improve results.

The factors that truly matter are channel, segment, and objective. Time is better spent optimizing those areas instead of adjusting campaign length.

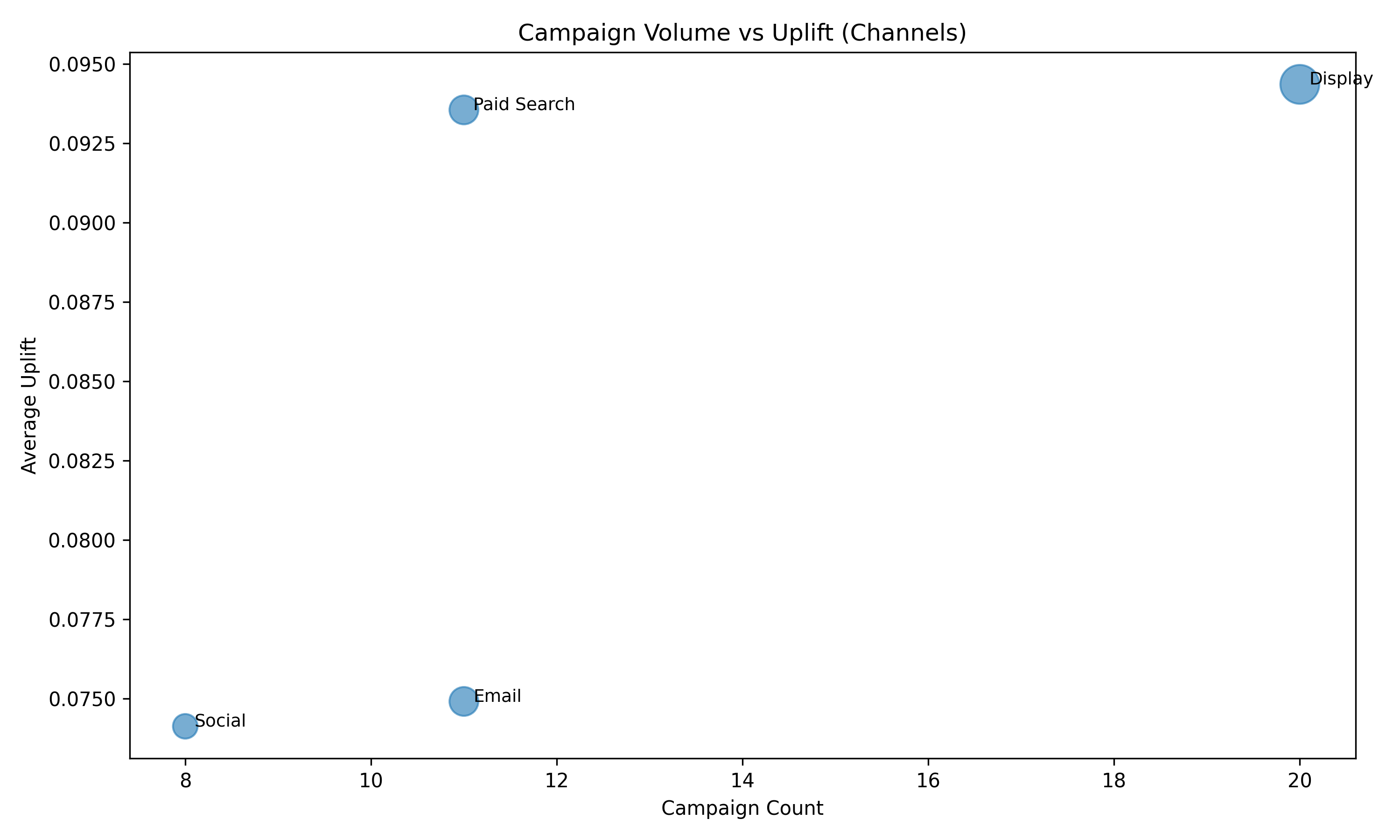

7. Are we investing in the right channels based on performance?

Measured by number of campaigns, current investment does not match performance. Email has the most campaigns (14) but delivers the lowest uplift (0.07491). Social has 12 campaigns but only mid-tier uplift (0.08300). Meanwhile, Display and Paid Search, two of the best-performing channels, have fewer campaigns at 11 and 10 respectively.

This shows a clear imbalance: too much effort is going into channels that deliver less impact, while the strongest ones are underused. Adjusting this mix would likely improve results without increasing budget.

Marketing Managers should reduce dependency on Email and expand the use of Display and Paid Search to better align effort with performance.
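
To make the imbalance concrete, the Python sketch below compares the campaign-weighted average uplift of the current mix with one hypothetical reallocation: moving four Email campaigns, two each to Display and Paid Search. It uses only the counts and mean uplift figures stated in this section, leaves out the Other group because its campaign count is not reported, treats campaign count as a proxy for investment, and assumes each added campaign would earn its channel's historical average. Real results would likely be lower because of diminishing returns and shifts in segment and objective mix, so the output is an optimistic estimate.

```python
# Campaign counts and mean uplift per channel as stated in this section.
CURRENT_MIX = {"Email": 14, "Social": 12, "Display": 11, "Paid Search": 10}
MEAN_UPLIFT = {"Email": 0.07491, "Social": 0.08300, "Display": 0.09435, "Paid Search": 0.09355}


def average_uplift(mix, mean_uplift=MEAN_UPLIFT):
    """Campaign-weighted average uplift for a channel mix.

    Assumes every campaign in a channel delivers that channel's historical
    mean uplift (no diminishing returns, no change in segment/objective mix).
    """
    total = sum(mix.values())
    return sum(count * mean_uplift[channel] for channel, count in mix.items()) / total


# Hypothetical reallocation: 4 Email campaigns moved, 2 to Display and 2 to Paid Search.
SHIFTED_MIX = {"Email": 10, "Social": 12, "Display": 13, "Paid Search": 12}

print(round(average_uplift(CURRENT_MIX), 4))  # 0.0855
print(round(average_uplift(SHIFTED_MIX), 4))  # 0.0871
```

Under these assumptions the shift raises average uplift from about 0.0855 to about 0.0871 across the same 47 campaigns, without adding any campaigns.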

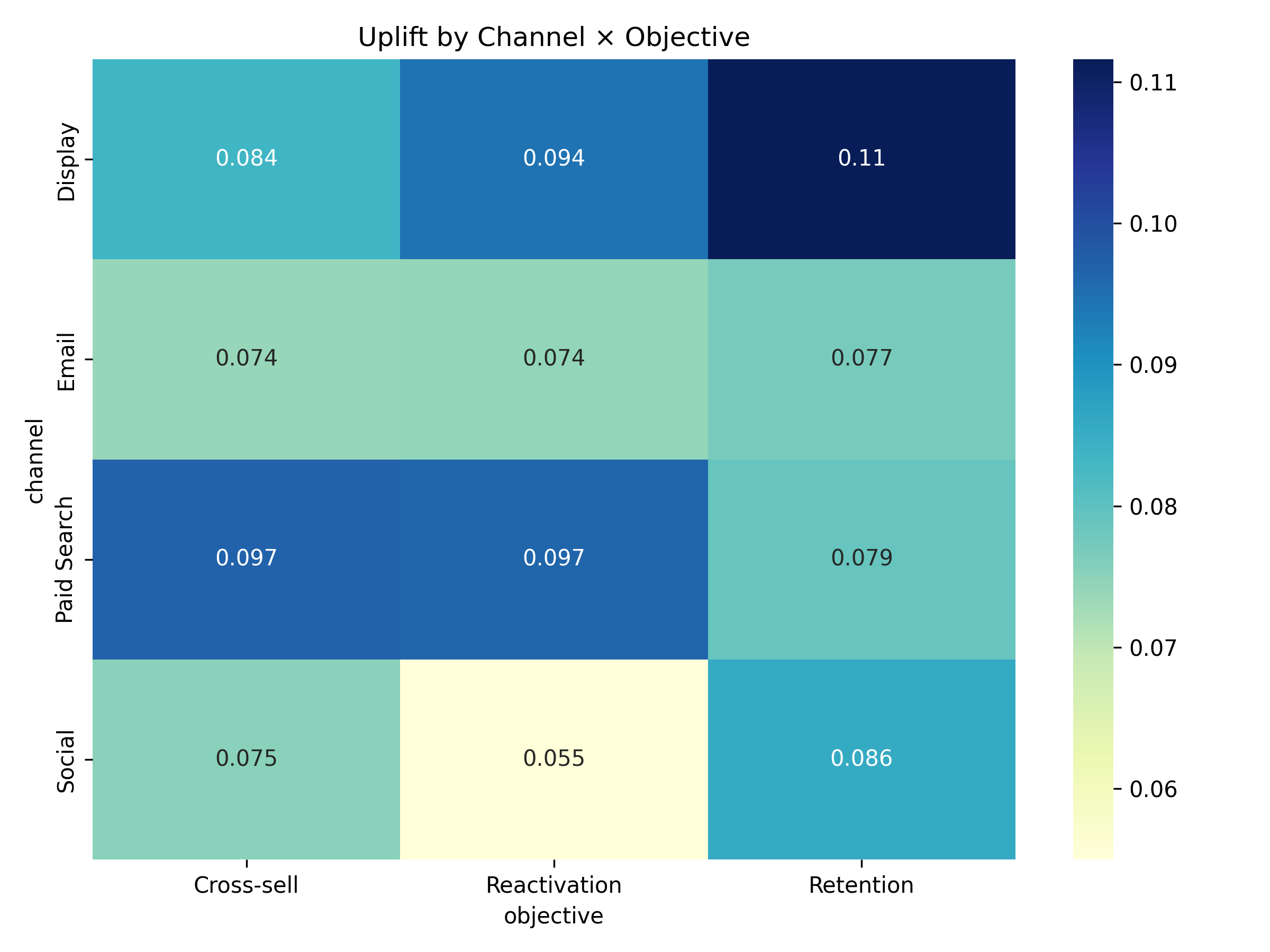

8. What combinations of channel, objective, and segment produce the strongest results?

The strongest results come from matching a channel with a responsive audience and a suitable objective. Social with Cross-sell for New Customers delivers the highest uplift at 0.144, even though Social is only mid-tier on average. Display with Retention for High Value customers also performs extremely well at 0.140.

Surprisingly, Email performs strongly when paired with Retention for New Customers (0.140), even though Email is weak on average. Paid Search also delivers high uplift (0.1275) when used for Cross-sell targeting High Value customers.

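These combinations show that context matters: matching the right channel with the right segment and objective can significantly lift performance. However, there are at least 60 possible combinations of channel group, segment, and objective (five channel groups, four segments, three objectives) but only 50 campaigns, so the figure for any single combination rests on very few campaigns. These combinations are best used as templates to test and confirm in future campaigns rather than as proven formulas.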
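
Because combination-level averages rest on so few campaigns, one common safeguard is to shrink each combination's mean toward the overall mean in proportion to how few campaigns it has. This is a swapped-in technique, not something the report itself applied. The Python sketch below assumes hypothetical field names (channel, objective, segment, uplift) and a hypothetical prior strength `k`, which acts like `k` imaginary campaigns at the overall average.

```python
from collections import defaultdict


def shrunk_combo_uplift(campaigns, prior_strength=5.0):
    """Rank channel/objective/segment combinations by shrunken mean uplift.

    shrunk = (sum of uplift + k * overall_mean) / (n + k), where n is the
    combination's campaign count and k = prior_strength (a tuning choice).
    Returns a list of (combination, shrunk_mean, n), best first.
    """
    overall = sum(c["uplift"] for c in campaigns) / len(campaigns)
    totals = defaultdict(lambda: [0.0, 0])  # combination -> [uplift sum, count]
    for c in campaigns:
        key = (c["channel"], c["objective"], c["segment"])
        totals[key][0] += c["uplift"]
        totals[key][1] += 1
    ranked = [
        (key, (total + prior_strength * overall) / (n + prior_strength), n)
        for key, (total, n) in totals.items()
    ]
    ranked.sort(key=lambda row: row[1], reverse=True)
    return ranked
```

With `prior_strength=0` this reduces to plain combination means; larger values give more weight to combinations backed by several campaigns, so a single standout campaign no longer dominates the ranking on its own.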

Conclusion

This analysis shows that some channels, segments, and objectives consistently deliver stronger uplift than others. Among the named channels, Display and Paid Search perform best, while High Value and New Customer segments respond most strongly. Cross-sell and Retention also stand out as the most effective objectives. These patterns give Marketing Managers a practical view of what works and clear direction for improving future campaigns.

To make the most of these insights, Marketing Managers should shift more budget toward high-performing channels, focus on the segments that offer the strongest response, and prioritize objectives with proven impact. Timing also matters: months like February and November are ideal for major campaigns, while September is better suited for testing. By aligning budget, targeting, objectives, and timing with the data, teams can run campaigns that deliver higher uplift and better overall returns.